Air Pollution

Data set details

| Data set description: | Air pollution data collected in the UK |

| Source: | UK AIR - Air Information Resource |

| Details on the retrieved data: | \(\text{PM}_{2.5}\) concentration in the UK throughout 2020. Data retrieved from the Automatic Urban and Rural Network. |

| Spatial and temporal resolution: | Yearly air pollution data measured in specific sites across the UK. |

Introduction

In this tutorial, we will see how to extract and work with a data set from the Department for Environment Food & Rural Affairs of the Government of the United Kingdom that measures the air pollution level across its territory. From the Data page, one can download data grouped by many different characteristics. However, to make it easier, we will use the openair package to retrieve the UK air pollution data.

Installing the openair package

One can easily install it by running the following code

if (!require(openair)) {

install.packages("openair")

library(openair)

}Alternatively, one can also refer to the the openair’s GitHub page, and install it via the devtools package.

Retrieving data

From the UK air pollution website, one can choose to work with many different Monitoring Networks. For this tutorial, we will use data from the Automatic Urban and Rural Network (AURN). In particular, we will study the concentration of \(\text{PM}_{2.5}\) (tiny particles that are two and one half microns or less in width) in the air \((\mu\text{g}/\text{m}^3)\) throughout the year 2020.

To do this let’s also use the tidyverse package.

library(tidyverse)Checking the available data

Firstly, one can check which sort of data is available for each Monitoring Network by using the ìmportMeta() function.

meta_data <- importMeta(source = "aurn", all = TRUE)

head(meta_data, 3)## # A tibble: 3 x 13

## code site site_type latitude longitude variable Parameter_name

## <chr> <chr> <chr> <dbl> <dbl> <chr> <chr>

## 1 ABD Aberdeen Urban Background 57.2 -2.09 O3 Ozone

## 2 ABD Aberdeen Urban Background 57.2 -2.09 NO Nitric oxide

## 3 ABD Aberdeen Urban Background 57.2 -2.09 NO2 Nitrogen dioxide

## # ... with 6 more variables: start_date <dttm>, end_date <chr>,

## # ratified_to <dttm>, zone <chr>, agglomeration <chr>, local_authority <chr>In the above code, we selected data from the AURN. Alternatively, one could have selected data from The Scottish Air Quality Network (saqn), Northern Ireland Air Quality Network (ni), etc. For an extensive list, refer to its documentation in ?importMeta. The parameter all is optional and makes the function return all data if set to TRUE.

Now, we can filter the sites that measure the pollutant of interest (\(\text{PM}_{2.5}\)). We can do this using the following snippet of code

selected_data <- meta_data %>%

filter(variable == "PM2.5")

head(selected_data, 3)## # A tibble: 3 x 13

## code site site_type latitude longitude variable Parameter_name

## <chr> <chr> <chr> <dbl> <dbl> <chr> <chr>

## 1 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5 PM2.5 particu~

## 2 ABD9 Aberdeen Erroll Pa~ Urban Ba~ 57.2 -2.09 PM2.5 PM2.5 particu~

## 3 ACTH Auchencorth Moss Rural Ba~ 55.8 -3.24 PM2.5 PM2.5 particu~

## # ... with 6 more variables: start_date <dttm>, end_date <chr>,

## # ratified_to <dttm>, zone <chr>, agglomeration <chr>, local_authority <chr>Downloading the selected data

Now that we have checked which data is available, one can download the desired data from the AURN by using the importAURN() function. Other networks can be accessed by using functions described here.

If one checks the importAURN() documentation, they will see that we can use the site, year and pollutant arguments. We will set them appropriately to download the desired data. As a remark, just notice that the site parameter has to be a list of the sites’ code written in lowercase. Therefore,

selected_sites <- selected_data %>%

select(code) %>%

mutate_all(.funs = tolower)

selected_sites## # A tibble: 121 x 1

## code

## <chr>

## 1 abd

## 2 abd9

## 3 acth

## 4 bple

## 5 bel2

## 6 birr

## 7 agrn

## 8 birm

## 9 bmld

## 10 bir1

## # ... with 111 more rowsFinally, we can download the data as follows

data <- importAURN(site = selected_sites$code, year = 2020, pollutant = "pm2.5")

head(data, 5)## # A tibble: 5 x 4

## date pm2.5 site code

## <dttm> <dbl> <chr> <chr>

## 1 2020-01-01 00:00:00 35.9 Aberdeen ABD

## 2 2020-01-01 01:00:00 5.82 Aberdeen ABD

## 3 2020-01-01 02:00:00 3.68 Aberdeen ABD

## 4 2020-01-01 03:00:00 3.07 Aberdeen ABD

## 5 2020-01-01 04:00:00 3.99 Aberdeen ABDNotice that, as we are download data from the servers, the above command can take some time to run.

Now, we can put together the information stored in the meta_data and data variables to have a final and filtered data set that we can work with. To do this, we will use the right_join() function (see its documentation here) in the following way

filtered_data <- meta_data %>%

filter(variable == "PM2.5") %>%

right_join(data, "code") %>%

select(date, pm2.5, code, site.x, site_type, latitude, longitude, variable) %>%

rename(site = site.x)

head(filtered_data, 5)## # A tibble: 5 x 8

## date pm2.5 code site site_type latitude longitude variable

## <dttm> <dbl> <chr> <chr> <chr> <dbl> <dbl> <chr>

## 1 2020-01-01 00:00:00 35.9 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5

## 2 2020-01-01 01:00:00 5.82 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5

## 3 2020-01-01 02:00:00 3.68 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5

## 4 2020-01-01 03:00:00 3.07 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5

## 5 2020-01-01 04:00:00 3.99 ABD Aberdeen Urban Ba~ 57.2 -2.09 PM2.5Saving the filtered data

To avoid having to download and filter the data every time, we can save it in a .csv file.

write_csv(filtered_data, "filtered_data.csv")Working with the downloaded data

If one saved the selected data in another R session, the first thing they have to do is loading the appropriate .csv file.

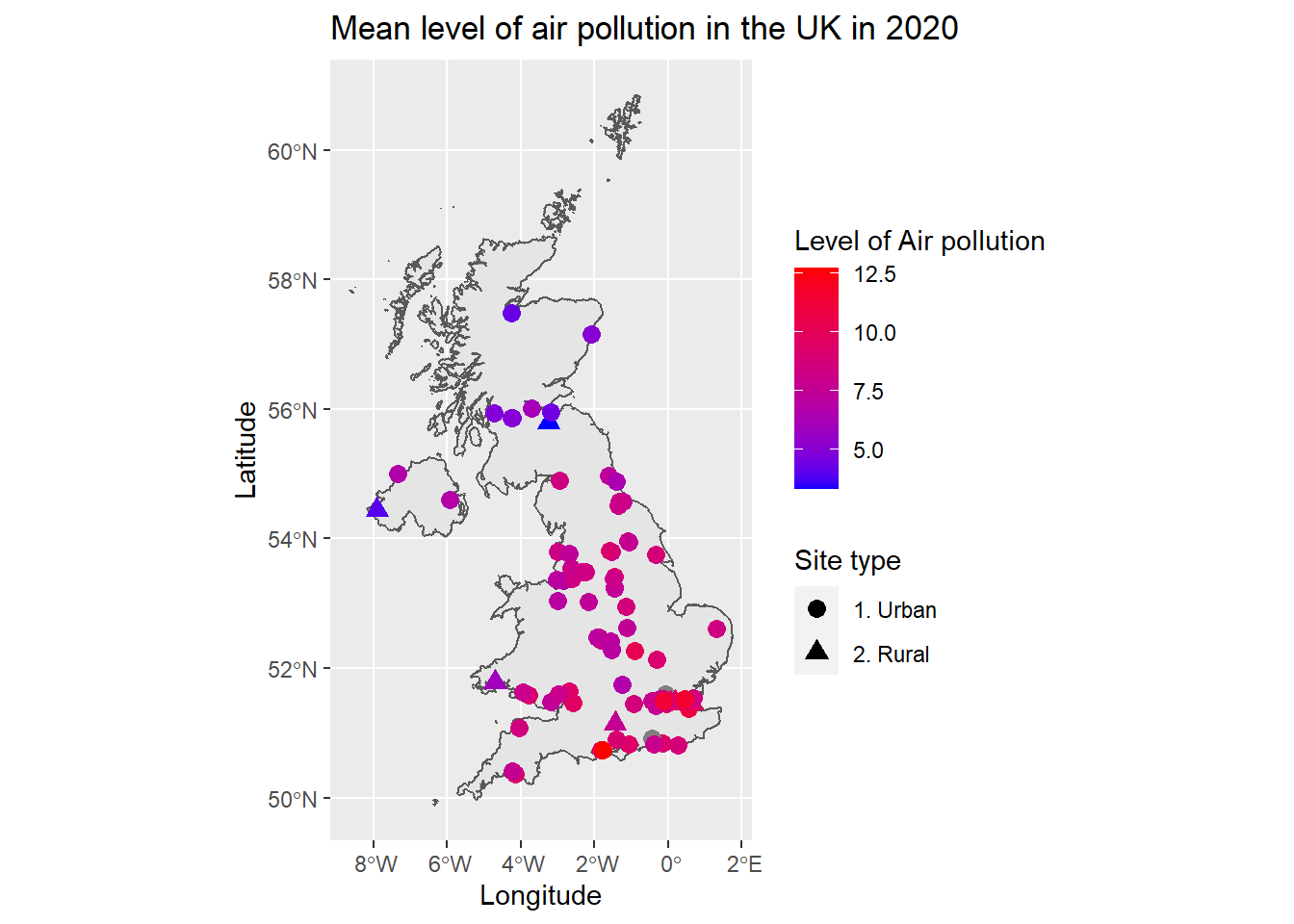

filtered_data <- read_csv(file = "filtered_data.csv")One thing that we can do with the data is visualizing where the selected sites are. To do this, we can refer back to the Administrative Boundaries tutorial.

But first, let’s summarize all the downloaded data by taking the average of the \(\text{PM}_{2.5}\) measurements in each site. Remark: If one wants to fit a model with a temporal component, this simplification may not be applied, since we are losing information doing this. However, for the purposes of this tutorial, we will keep it simple.

all_sites <- filtered_data %>%

group_by(site) %>%

summarize(mean = mean(pm2.5, na.rm = TRUE), latitude = first(latitude), longitude = first(longitude), site_type = first(site_type))

head(all_sites, 5)## # A tibble: 5 x 5

## site mean latitude longitude site_type

## <chr> <dbl> <dbl> <dbl> <chr>

## 1 Aberdeen 5.02 57.2 -2.09 Urban Background

## 2 Auchencorth Moss 3.33 55.8 -3.24 Rural Background

## 3 Barnstaple A39 8.44 51.1 -4.04 Urban Traffic

## 4 Belfast Centre 6.82 54.6 -5.93 Urban Background

## 5 Birmingham A4540 Roadside 8.20 52.5 -1.87 Urban TrafficFrom the above output, we can see that the sites are also classified according to their type. In real-world applications, one may be interested in working only with the Rural Background sites; in this case, we can simply apply a filter to the all_sites data.

all_rural_data <- all_sites %>%

filter(site_type == "Rural Background")

head(all_rural_data, 3)## # A tibble: 3 x 5

## site mean latitude longitude site_type

## <chr> <dbl> <dbl> <dbl> <chr>

## 1 Auchencorth Moss 3.33 55.8 -3.24 Rural Background

## 2 Chilbolton Observatory 7.60 51.1 -1.44 Rural Background

## 3 Lough Navar 3.96 54.4 -7.90 Rural BackgroundAlternatively, we can group the Urban Background, Urban Industrial and Urban Traffic sites, and the Rural Background and Suburban Background sites into Urban and Rural classes, respectively.

transform_site_type <- function(site_type) {

result <- c()

for (i in (1:length(site_type))) {

if ((site_type[i] == "Urban Background") | (site_type[i] == "Urban Industrial") | (site_type[i] == "Urban Traffic")) {

result <- c(result, "1. Urban")

} else {

result <- c(result, "2. Rural")

}

}

result

}

all_sites <- all_sites %>%

mutate(new_site_type = transform_site_type(site_type))

head(all_sites)## # A tibble: 6 x 6

## site mean latitude longitude site_type new_site_type

## <chr> <dbl> <dbl> <dbl> <chr> <chr>

## 1 Aberdeen 5.02 57.2 -2.09 Urban Backgr~ 1. Urban

## 2 Auchencorth Moss 3.33 55.8 -3.24 Rural Backgr~ 2. Rural

## 3 Barnstaple A39 8.44 51.1 -4.04 Urban Traffic 1. Urban

## 4 Belfast Centre 6.82 54.6 -5.93 Urban Backgr~ 1. Urban

## 5 Birmingham A4540 Roadside 8.20 52.5 -1.87 Urban Traffic 1. Urban

## 6 Birmingham Acocks Green 7.88 52.4 -1.83 Urban Backgr~ 1. UrbanNow, using the rgeoboundaries package (in addition to the ggplot from tidyverse), we can plot the map for all sites as follows

library(rgeoboundaries)

# Download the boundaries

uk_boundary <- geoboundaries(country = "GBR")

# Plot the map

ggplot(data = uk_boundary) +

geom_sf() +

geom_point(data = all_sites, aes(x = longitude, y = latitude, shape = new_site_type, color = mean), size = 3) +

scale_color_gradient(name = "Level of Air pollution", low = "blue", high = "red") +

scale_shape_discrete(name = "Site type") +

ggtitle("Mean level of air pollution in the UK in 2020") +

labs(x = "Longitude", y = "Latitude")

References

- UK air pollution website: https://uk-air.defra.gov.uk/

openairpackage website: https://davidcarslaw.github.io/openair/- The

openairbook: https://bookdown.org/david_carslaw/openair/ tidyversepackage website: https://www.tidyverse.org/rgeoboundariesrepository: https://github.com/wmgeolab/rgeoboundaries

Last updated: 2023-01-07

Source code: https://github.com/rspatialdata/rspatialdata.github.io/blob/main/air_pollution.Rmd

Tutorial was complied using: (click to expand)

## R version 4.0.3 (2020-10-10)

## Platform: x86_64-w64-mingw32/x64 (64-bit)

## Running under: Windows 10 x64 (build 18363)

##

## Matrix products: default

##

## locale:

## [1] LC_COLLATE=English_United States.1252

## [2] LC_CTYPE=English_United States.1252

## [3] LC_MONETARY=English_United States.1252

## [4] LC_NUMERIC=C

## [5] LC_TIME=English_United States.1252

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] forcats_0.5.1 stringr_1.4.0

## [3] dplyr_1.0.4 purrr_0.3.4

## [5] readr_2.1.2 tidyr_1.1.4

## [7] tibble_3.1.6 tidyverse_1.3.1

## [9] openair_2.9-1 leaflet_2.1.1

## [11] ggplot2_3.3.5 rgeoboundaries_0.0.0.9000

##

## loaded via a namespace (and not attached):

## [1] nlme_3.1-149 fs_1.5.2 sf_1.0-7

## [4] bit64_4.0.5 lubridate_1.8.0 httr_1.4.2

## [7] RColorBrewer_1.1-2 tools_4.0.3 backports_1.4.1

## [10] bslib_0.3.1 utf8_1.1.4 R6_2.5.0

## [13] KernSmooth_2.23-17 DBI_1.1.2 mgcv_1.8-33

## [16] colorspace_2.0-3 withr_2.5.0 tidyselect_1.1.0

## [19] bit_4.0.4 curl_4.3.2 compiler_4.0.3

## [22] rvest_1.0.2 cli_3.2.0 xml2_1.3.2

## [25] labeling_0.4.2 triebeard_0.3.0 sass_0.4.0

## [28] scales_1.1.1 classInt_0.4-3 hexbin_1.28.2

## [31] rappdirs_0.3.3 digest_0.6.27 rmarkdown_2.11

## [34] jpeg_0.1-9 pkgconfig_2.0.3 htmltools_0.5.2

## [37] dbplyr_2.1.1 fastmap_1.1.0 highr_0.9

## [40] maps_3.4.0 readxl_1.3.1 htmlwidgets_1.5.4

## [43] rlang_1.0.2 rstudioapi_0.13 httpcode_0.3.0

## [46] jquerylib_0.1.4 farver_2.1.0 generics_0.1.2

## [49] jsonlite_1.8.0 vroom_1.5.7 crosstalk_1.2.0

## [52] magrittr_2.0.1 s2_1.0.7 Matrix_1.2-18

## [55] Rcpp_1.0.7 munsell_0.5.0 fansi_0.4.2

## [58] lifecycle_1.0.1 stringi_1.5.3 yaml_2.2.1

## [61] MASS_7.3-53 grid_4.0.3 parallel_4.0.3

## [64] crayon_1.5.1 lattice_0.20-41 haven_2.5.0

## [67] splines_4.0.3 mapproj_1.2.8 hms_1.1.1

## [70] knitr_1.33 pillar_1.7.0 reprex_2.0.1

## [73] crul_1.2.0 wk_0.5.0 glue_1.6.2

## [76] evaluate_0.15 latticeExtra_0.6-29 modelr_0.1.8

## [79] hoardr_0.5.2 png_0.1-7 vctrs_0.3.8

## [82] tzdb_0.3.0 urltools_1.7.3 cellranger_1.1.0

## [85] gtable_0.3.0 assertthat_0.2.1 cachem_1.0.6

## [88] xfun_0.30 broom_0.8.0 countrycode_1.2.0

## [91] e1071_1.7-4 class_7.3-17 memoise_2.0.1

## [94] units_0.8-0 cluster_2.1.0 ellipsis_0.3.2

Corrections: If you see mistakes or want to suggest additions or modifications, please create an issue on the source repository or submit a pull request Reuse: Text and figures are licensed under Creative Commons Attribution CC BY 4.0.